What is web-scraping?

Web-scraping is the automated collection of information from webpages.

The authors of “Automated Collection with R. A Practical Guide to Webscraping and Text Mining” explain the process very clearly:

“The Web consists predominantly of unstructured text.

One of the central tasks in web scraping is to collect the relevant information for our research problem from heaps of textual data. Within the unstructured text we are often interested in systematic information – especially when we want to analyze the data using quantitative methods.

Systematic structures can be numbers or recurrent names like countries or addresses.

We usually proceed in three steps:

First we gather the unstructured text,

second we determine the recurring patterns behind the information we are looking for, and

third we apply these patterns to the unstructured text to extract the information.”

(Munzert et al., 2015)

Why would you do web scraping?

As I quickly discovered, use cases range from business to government research, to academia.

Imagine you are:

- A hotel manager.

It’s Christmas and you might want to compare your room prices with the ones set by other hotels.

Are the other hotels pricing up the rooms?

By how much?

- A fashion buyer.

You might want to download images of clothes in this fashion season.

What’s the current fashion color trend?

You might want to set up an automated process to go through several websites. Some macros might help you do it.

Academia uses web scraping, too.

Here’s what I am personally more interested in.

Academia has been conducting research using web scraping backed up by theory.

- Psychological research, Big Data, and web scraping

One example:

Landers, R. N., Brusso, R. C., Cavanaugh, K. J., & Collmus, A. B. (2016). A primer on theory-driven web scraping: Automatic extraction of big data from the Internet for use in psychological research. Psychological Methods, 21(4), 475-492.

The authors mention “theory-driven web scraping in which the choice to use web-based big data must follow substantive theory.”

- Business research:

In the Journal of Planning Education and Research, Boeing and Waddell used web scraping in “New Insights into Rental Housing Markets across the United States. Web Scraping and Analyzing Craigslist Rental Listings”

Current sources of data on rental housing—such as the census or commercial databases that focus on large apartment complexes—do not reflect recent market activity or the full scope of the US rental market. To address this gap, we collected, cleaned, analyzed, mapped, and visualized eleven million Craigslist rental housing listings.

(Boeing and Waddell, 2017)

What about the Office of National Statistics?

The ONS has a webpage on its website dedicated to it.

Its Web-scraping policy clarifies why it uses web scraping and for which research purposes.

This policy sets out the practices and procedures that Office for National Statistics (ONS) staff will follow when carrying out web-scraping or using web-scraped data.

We are committed to using new sources of data to produce statistics, analysis and advice, which help Britain make better decisions.Driven by this strategic imperative, Office for National Statistics (ONS) staff may use web-scraping as a data collection mechanism.

Here are some of the mentioned use cases:

|

Example 1

|

Example 2

|

|

Example 3

|

Example 4

|

(Source: ONS)

Web scraping or web crawling?

Whenever you scrape more than one page on a single page or multiple websites, the term I have found for it is “web crawling”.

What about social media?

It doesn’t really make sense to web scrape social media platforms (e.g. Twitter feeds) . All these platforms offer APIs.

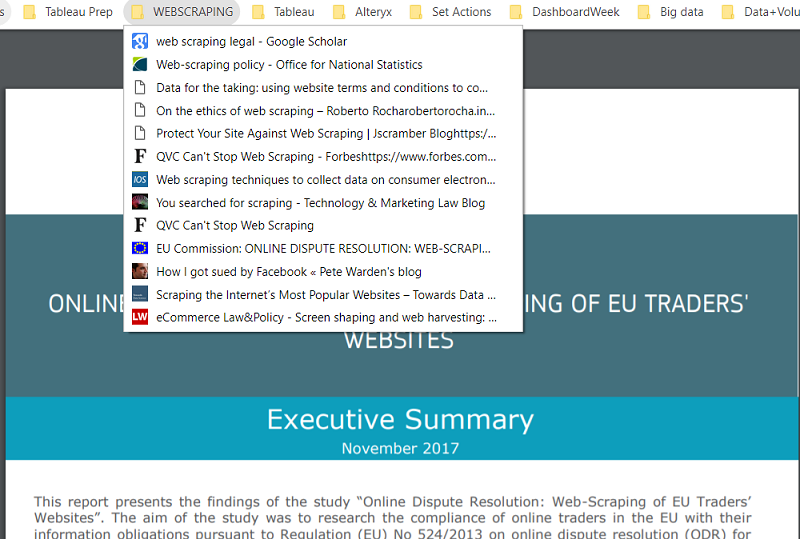

Is web scraping legal?

Just starting…

I was very intrigued by this question.

As usual, I quickly went down the rabbit hole of the internet and realized that I can in no way properly answer it.

Terms of Use

My personal civic tech project entailed web scraping an Italian government website.

The Terms and Conditions, as for many government websites, were quite open.

Italian Ministry of Justice website – (translated) Terms and Conditions

“Textual information and multimedia elements are owned by the Ministry of Justice and their authors and those entitled. The user of the contents of this site is bound to respect the intellectual property rights of the Ministry of Justice and third parties.

The use, reproduction, extraction of copies, distribution of textual information, multimedia elements and knowledge available on this site are authorized only to the extent that they occur in compliance with the public interest in information, for non-commercial purposes, ensuring the integrity of the elements reproduced and by indicating the source. The administration identifies the hypotheses of possible use also for commercial purposes.

The use, reproduction, extraction of copies and the distribution of some data may be subject – if the conditions laid down by the law occur – to different conditions such as the consent of the persons photographed, filming or cited.”

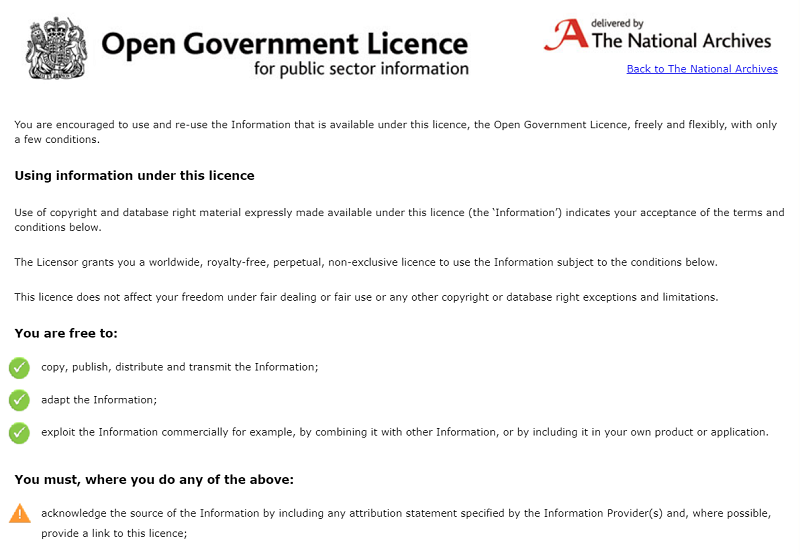

Gov.uk’s Terms and Conditions

Most content on GOV.UK is subject to Crown copyright protection and is published under the Open Government Licence (OGL).

Some content is exempt from the OGL – check the list of exemptions.

Departmental logos and crests are also exempt from the OGL, except when they form an integral part of a document or dataset.

If any content is not subject to Crown copyright protection or published under the OGL, we’ll usually credit the author or copyright holder. You can reproduce content published on GOV.UK under the OGL as long as you follow the licence’s conditions.

Open Government License

The business world works quite differently. Companies sometimes explicitly mention “scraping” as a forbidden practice.

In the vast world of Conference Proceedings, I found a paper on the Legality and Ethics of Web Scraping at AMCIS2018.

Automatic retrieval of data from the Web (often referred to as Web Scraping) for industry and academic research projects is becoming a common practice. A variety of tools and technologies have been developed to facilitate Web Scraping. Unfortunately, the legality and ethics of using these Web Scraping tools are often overlooked. This work in progress reviews legal literature together with Information Systems literature on ethics and privacy to identify broad areas of concern together with a list of specific questions that need to be addressed by researchers employing Web Scraping for data collection. Reflecting on these questions and concerns can potentially help the researchers decrease the likelihood of ethical and legal controversies in their work. Further research is needed to refine the intricacies of legal and ethical issues surrounding Web Scraping and devise strategies and tactics that researchers can use for addressing these issues.

I am looking forward to reading a book that addresses this whole topic in depth.

Which tools do you need?

The web offers several tools that you can use. Most of them are free for limited use and offer paid plans for more advanced tasks.

R and Python are available, too. However, you should be trained in coding and comfortable using them.

In the next post, we are going to look at a practical example together using Alteryx.

You don’t have Alteryx and would like to try it? Install the trial version here.

Are you a student or an academic? Do you work in the nonprofit sector? Then, check out this page.

What to download?

We are going to use:

- Alteryx Designer

- The Developer tools available both on Chrome and Firefox.

- Sublime text or the text editor you prefer.

Check out my second blog post Web Scraping 101: A guided example in Alteryx – Part 1.

References

- Association for Information Systems (AIS): Legality and Ethics of Web Scraping

https://aisel.aisnet.org/amcis2018/DataScience/Presentations/17/ - Boeing, G., & Waddell, P. (2017). New Insights into Rental Housing Markets across the United States: Web Scraping and Analyzing Craigslist Rental Listings. Journal of Planning Education and Research, 37(4), 457–476. https://doi.org/10.1177/0739456X16664789

- Munzert, Simon & Rubba, Christian & Meissner, Peter & Nyhuis, Dominic. (2015). Automated data collection with R: A practical guide to web scraping and text mining. https://onlinelibrary.wiley.com/doi/book/10.1002/9781118834732

- vanden Broucke S., Baesens B. (2018) From Web Scraping to Web Crawling. In: Practical Web Scraping for Data Science. Apress, Berkeley, CA. https://doi.org/10.1007/978-1-4842-3582-9_6

*******************

Credits: