Introduction:

At this point, it is unlikely that anyone reading this blog is unaware of Chat GPT and the recent developments in AI technology. With its ability to understand and generate text, Chat GPT has elevated human-machine interactions to unprecedented levels, blurring the line between artificial intelligence and human intelligence. Chat GPT has been trained on a vast amount of text data and can respond to prompts or questions in a coherent and contextually relevant manner, making it a natural tool to simulate conversations, provide information, answer queries, and engage in interactive dialogue.

The next crucial step in the evolution of language models such as Chat GPT lies in their seamless integration into the existing tools and platforms we use every day. In fact, this next step seems to be well under way, as companies are racing to integrate AI into their products. Google, Microsoft, Salesforce and now Tableau all have plans to leverage AI in order to make insights more accessible and empower users to make informed choices more efficiently.

What about Alteryx? If you worry that your favorite data preparation platform is lagging behind when it comes to AI integration, fear no more! In this blog, I will show you how you can easily leverage Chat GPT in Alteryx by integrating the language model inside your workflow!

Step 1: Download the Chat GPT Completion Macro

Follow this link and download the Alteryx Macro directly from the author's page!

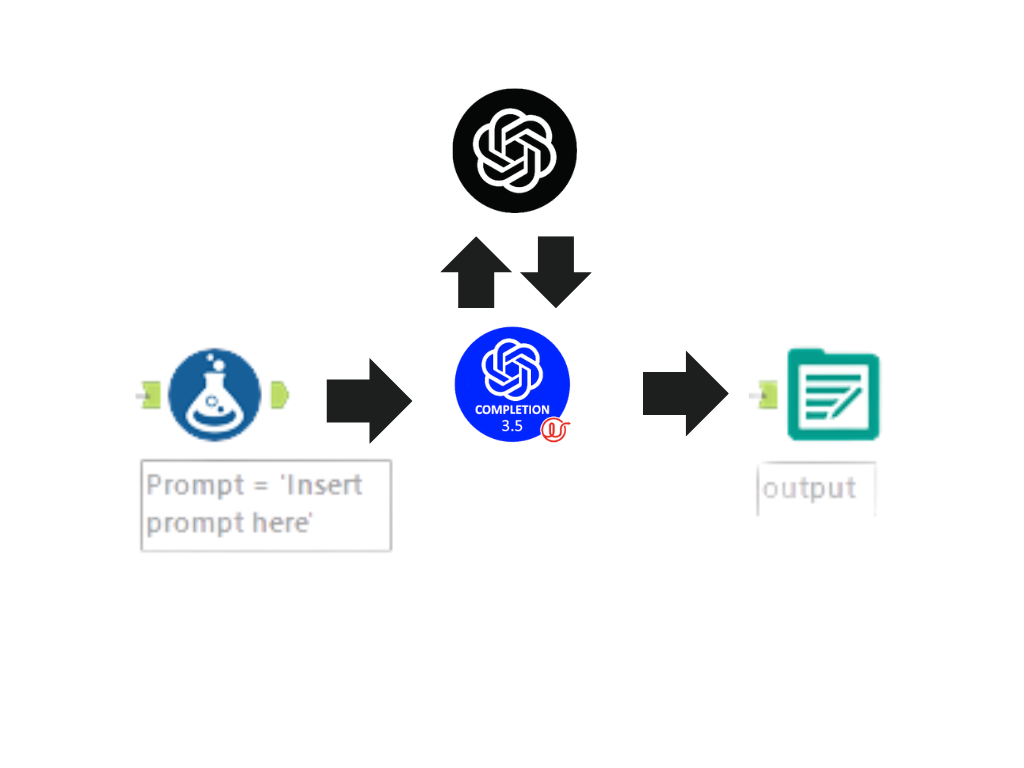

As you probably know, Alteryx has a thriving and active community that offers valuable resources and support to its users. In this case, Continuum Jersey (an Alteryx Partner) has put together a Macro that acts as a bridge between Alteryx and OpenAI's Chat GPT. This macro is a connector that points to the completion API for GPT-3.5 Turbo, the model behind Chat GPT. Essentially, the Macro takes a text prompt as an input, sends that prompt to Chat GPT with additional parameters, and outputs the answer to your prompt as a string.
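To make the "prompt in, string out" idea concrete, here is a minimal Python sketch of the kind of request such a connector might assemble. The macro's internals are not shown in this post, so treat everything here as an assumption: the function name `build_request` is hypothetical, the keys are placeholders, and the defaults simply mirror the macro defaults described later in this post. Nothing is actually sent.

```python
import json

# OpenAI's public chat completions endpoint, which serves gpt-3.5-turbo.
# Assumption: the macro calls something equivalent; its internals are not shown here.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt, api_key, organisation_id,
                  max_tokens=200, temperature=0.0, n=1, stop=None):
    """Return (headers, body) for one prompt. Nothing is sent over the network."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "OpenAI-Organization": organisation_id,
        "Content-Type": "application/json",
    }
    payload = {
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
        "n": n,
    }
    # Only include a stop string when one was explicitly requested
    if stop is not None:
        payload["stop"] = stop
    return headers, json.dumps(payload)


# Placeholder credentials, for illustration only
headers, body = build_request("Say hello.", "sk-PLACEHOLDER", "org-PLACEHOLDER")
print(API_URL)
print(body)
```

The point of the sketch is only that each prompt becomes one small JSON request; the macro hides this plumbing behind a configuration screen.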

Here is a quick diagram I made to explain how the Macro works:

Step 2: Create a prompt from your data

Once you have successfully downloaded the Macro and placed it in your Macros folder, the fun begins! The first step of the process is to create a prompt (or a question) inside Alteryx. Luckily, you can easily do that with a formula tool. The cool thing about this is that you can use the existing fields in your data to tailor each prompt to each row in your dataset. A unique prompt will then lead to a unique output, tailored to the information you provide inside that prompt. Although this did not seem revolutionary to me at first, I quickly realized I could use this macro to send a batch of unique prompts to Chat GPT and have the answers neatly stored in a table inside Alteryx, ready for additional processing and analysis. To me, this batch process is the central advantage of using Chat GPT directly in Alteryx, and it opens up a world of possible applications.

To illustrate this concept, I will now demonstrate how to create a batch of prompts in Alteryx using a simple formula tool.

Challenge: Write personalized Emails to potential customers

You are working for The Fork, a well-known restaurant reservation platform that operates in various countries. The Fork allows users to discover and make online reservations at a wide range of restaurants. Using Alteryx and Chat GPT, write personalized emails to potential clients and convince them to use your services. Use the information your marketing team gathered to tailor each prompt to a specific client.

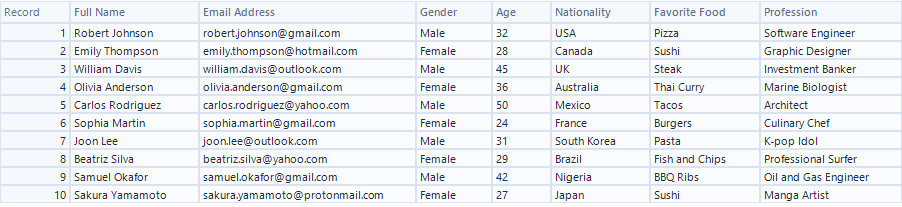

Original Mock Dataset (generated with Chat GPT):

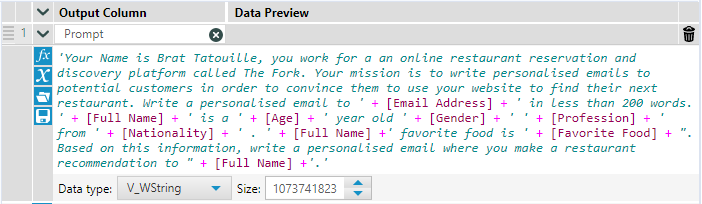

Your marketing team worked hard to gather information on your clients' gender, age, nationality, profession, and favorite food. Let's incorporate this information into a prompt using the formula tool.

As you can see, the aim of this prompt is to provide context, role, and instructions to the Language Model. By embedding fields inside the prompt, you can ensure you get one unique prompt per row and hence, one tailored email for each customer.
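For readers who think in code, the same idea can be sketched in Python. This is not the formula used in the workflow: the field names, the wording of the prompt, and the two rows below are all made up for illustration. It simply shows how embedding fields in a template yields one distinct prompt per row.

```python
def build_prompt(row):
    """Embed one customer's fields into a prompt with a role, context and instructions."""
    return (
        "You are a marketing assistant at The Fork, a restaurant reservation platform. "
        f"Write a short, friendly email to {row['name']}, a {row['age']}-year-old "
        f"{row['nationality']} {row['profession']} whose favorite food is "
        f"{row['favorite_food']}. Recommend a restaurant and invite them to book it "
        "through The Fork."
    )


# Made-up rows for illustration; these are not the dataset shown in this post
customers = [
    {"name": "Customer A", "age": 30, "nationality": "Spanish",
     "profession": "teacher", "favorite_food": "paella"},
    {"name": "Customer B", "age": 45, "nationality": "Japanese",
     "profession": "architect", "favorite_food": "ramen"},
]

# One prompt per row, each tailored to that row's fields
prompts = [build_prompt(row) for row in customers]
for prompt in prompts:
    print(prompt)
    print()
```

In Alteryx, the Formula tool expresses the same thing by concatenating text with field references, so no Python is needed in the actual workflow.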

Step 3: Set your parameters

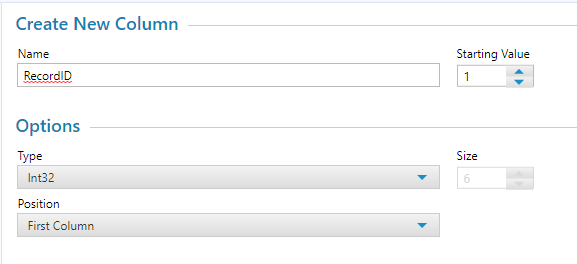

a) Once your prompt is ready, add a Record ID field to your workflow

You will need a unique Record ID for each row so that you can join response records back to your input records.

You can keep the default settings of the Record ID tool. The most important thing is that the Type remains Int32.
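Why the Record ID matters is easiest to see with a tiny example. The sketch below is plain Python with made-up rows, and the column names `RecordID`, `Prompt` and `Response` are assumptions rather than the macro's exact field names. It numbers the input rows, pretends the responses come back out of order with one missing, and joins them back by ID.

```python
# Made-up prompts standing in for the rows of your dataset
prompts = ["Prompt for customer A", "Prompt for customer B", "Prompt for customer C"]

# Number the rows with sequential integers, starting at 1
inputs = [{"RecordID": i, "Prompt": p} for i, p in enumerate(prompts, start=1)]

# Pretend the responses arrive out of order and one request got no answer
responses = [
    {"RecordID": 3, "Response": "Email for C"},
    {"RecordID": 1, "Response": "Email for A"},
]

# Join responses back to inputs on RecordID; missing answers become None
by_id = {r["RecordID"]: r["Response"] for r in responses}
joined = [{**row, "Response": by_id.get(row["RecordID"])} for row in inputs]

for row in joined:
    print(row)
```

Without a unique ID, there would be no reliable way to tell which email belongs to which customer once rows are dropped or reordered.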

b) Insert your API Secret Key and your Personal Organisation ID into the Macro.

Here is a video explaining how to get your API Secret Key:

Here is how you get your Personal Organisation ID:

Once you have collected your API Secret Key and your Personal Organisation ID, you are ready to use the Chat GPT completion connector. Simply drag the tool onto your canvas and connect it to your workflow.

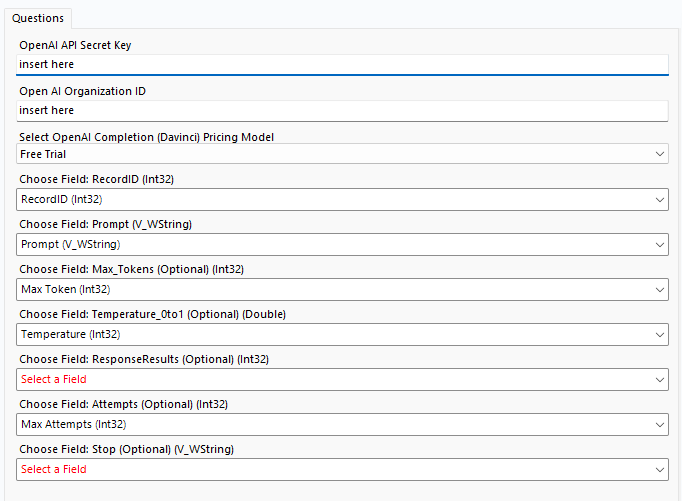

Once the tool is connected, the following settings page will appear. Insert your API Secret Key and your Personal Organisation ID in the corresponding boxes.

c) Set mandatory parameters

Select Free Trial for the pricing model, choose your Record ID field and your Prompt field, and you are ready to go!

d) Set optional parameters

Optional parameters include:

Max token: OpenAI uses the concept of tokens, as approximations for words, to limit the size of the reply. The default value in the macro is 200 tokens, and you can override this by putting your own value here. If you find the response you obtain is cut off, it might be because you reached the maximum number of tokens. In this case, you might consider modifying your prompt to obtain a shorter response or increasing your max token parameter to get a longer answer. Bear in mind that, outside of the free trial pricing model, the price you pay for the use of Chat GPT depends on the number of tokens you use.

Temperature: Temperature is a value between 0 and 1 that reflects how "creative" you want the AI to be. The default macro value is 0, and it should produce deterministic and repeatable results. If you want the AI to be a bit scatty and flighty, you can increase this value up to 1.

Response Result: You can ask the API to give you multiple replies with this value. If you use 0 for Temperature, then each reply will probably be the same, so this value defaults to 1 for a single response. If you use a high Temperature value like 0.9, each response might be different, so you could pick the most appropriate or creative response from the selection.

Attempts: Chat GPT is very popular and is still in beta. As such, it is sometimes busy, or it may crash. This Attempts value sets how many tries the macro should make to get a good response, and it defaults to 5 attempts per request. This is usually enough to ride over the minor temporary outages that occur, but you can raise this value if it is important for you to get a response for each prompt.

Stop: Chat GPT's Completions endpoint is designed to respond a little unpredictably, as a human might do. You can ask it to cease its response when it naturally generates a particular string, for example ". ", to indicate the end of a sentence. The macro defaults to not sending this string unless you override it here.
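The Attempts parameter is essentially a retry loop. Here is a minimal Python sketch of that idea, not the macro's actual logic: `call_with_attempts` and `make_flaky_sender` are hypothetical names, the fake sender stands in for the real API, and the fixed wait between tries is my own assumption.

```python
import time


def call_with_attempts(send, prompt, attempts=5, wait_seconds=1.0):
    """Call send(prompt) up to `attempts` times.

    Returns the first (status, text) pair whose status is 200,
    otherwise the result of the last attempt.
    """
    result = (None, None)
    for attempt in range(1, attempts + 1):
        result = send(prompt)
        if result[0] == 200:
            break
        # Pause before retrying, but not after the final attempt
        if attempt < attempts:
            time.sleep(wait_seconds)
    return result


def make_flaky_sender(failures):
    """Hypothetical stand-in for the API: answers 429 `failures` times, then 200."""
    state = {"calls": 0}

    def send(prompt):
        state["calls"] += 1
        if state["calls"] <= failures:
            return 429, None
        return 200, f"Reply to: {prompt}"

    return send


# Two busy responses followed by a success, with 5 attempts allowed
status, text = call_with_attempts(make_flaky_sender(2), "Hello", attempts=5, wait_seconds=0)
print(status, text)
```

Raising the number of attempts makes it more likely that every row gets an answer, at the cost of a longer runtime when the service is busy.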

In the context of the email list example, I wanted to allow the AI to be creative, so I set the Temperature to 0.7 by creating another calculated field. I also wanted to get an answer for each email and did not mind waiting a little longer, so I set the number of attempts to 10 in another calculated field. Finally, I set Max Tokens to 400 because I noticed my responses were cut off when the completion reached 200 tokens.

I kept the default setting for the Response Result and Stop parameter as I did not want to have multiple answers per row and I did not want the answer to stop after a particular string.

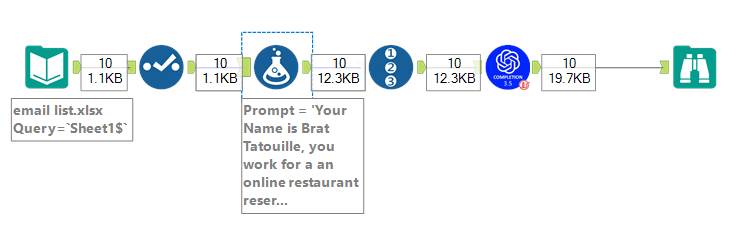

Here is a screenshot of my final workflow:

As you can see, the workflow is simple and accessible to anyone with basic Alteryx training.

Step 4: Run your flow

Depending on the number of rows you have, the number of attempts you allow, and the complexity of your prompt, the runtime of your workflow might vary greatly. In the case of our email example, the workflow ran for about 1 minute 30 seconds before completing, and you could run the same workflow for hours if you had more emails to write!

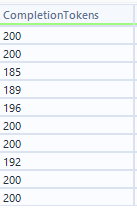

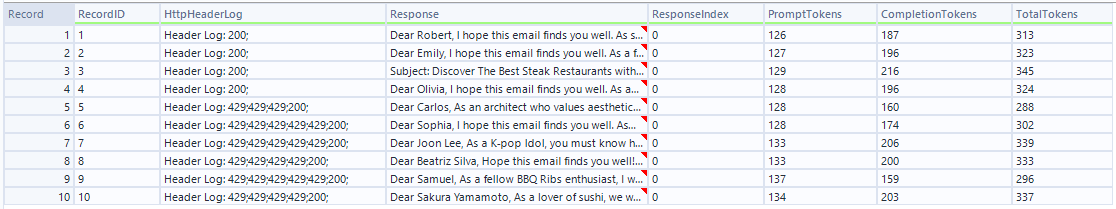

Step 5: Inspect your output

As you can see, your output is composed of your Record ID, a response status code log, your response, a response index, the number of tokens used for the prompt, the number of tokens used to answer your prompt, and the total number of tokens used.
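These columns map closely onto the JSON that OpenAI's chat completions API returns. The sketch below shows one way such a response could be flattened into table rows. It is an illustration, not the macro's implementation: the column names are my own, and the sample response (including its token counts) is made up.

```python
import json

# Made-up sample in the shape of a chat completions response
sample_response = json.loads("""
{
  "choices": [
    {"index": 0,
     "message": {"role": "assistant", "content": "Dear customer, ..."},
     "finish_reason": "stop"}
  ],
  "usage": {"prompt_tokens": 12, "completion_tokens": 30, "total_tokens": 42}
}
""")


def flatten(record_id, status_code, response):
    """Turn one API response into one row per returned choice."""
    usage = response.get("usage", {})
    return [
        {
            "RecordID": record_id,
            "StatusCode": status_code,
            "Response": choice["message"]["content"],
            "ResponseIndex": choice["index"],
            "PromptTokens": usage.get("prompt_tokens"),
            "CompletionTokens": usage.get("completion_tokens"),
            "TotalTokens": usage.get("total_tokens"),
        }
        for choice in response.get("choices", [])
    ]


for row in flatten(1, 200, sample_response):
    print(row)
```

If you ask for several replies per prompt, the response index is what distinguishes the rows that share the same Record ID.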

The HTTP 200 OK success status response code indicates that the request has succeeded whilst the HTTP 429 Too Many Requests response status code indicates the user has sent too many requests in a given amount of time ("rate limiting"). This is where setting the right number of Attempts can have a big impact on the number of answers in your output and the time your flow runs for.

The total number of tokens gives you an idea of both the length and the price of your query. At a price of $0.002 per 1K tokens, generating 10 emails with Chat GPT cost me $0.0064, so generating 10,000 emails would cost about $6.40, roughly the price of a Big Mac with fries 🍔🍟
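The arithmetic behind that estimate is easy to reproduce. The short Python sketch below uses only the two figures quoted above ($0.002 per 1K tokens and $0.0064 for 10 emails) and assumes that every email uses about the same number of tokens, which is a simplification. The function name `estimate_cost` is my own.

```python
PRICE_PER_1K_TOKENS = 0.002   # dollars, as quoted above
COST_FOR_10_EMAILS = 0.0064   # dollars, as quoted above

# Work backwards from the cost to the number of tokens used
tokens_for_10 = COST_FOR_10_EMAILS / PRICE_PER_1K_TOKENS * 1000
tokens_per_email = tokens_for_10 / 10


def estimate_cost(n_emails, tokens_per_email, price_per_1k=PRICE_PER_1K_TOKENS):
    """Estimated cost in dollars, assuming every email uses the same number of tokens."""
    return n_emails * tokens_per_email / 1000 * price_per_1k


print(f"Tokens for 10 emails: {tokens_for_10:.0f}")
print(f"Tokens per email:     {tokens_per_email:.0f}")
print(f"Cost of 10,000:       ${estimate_cost(10_000, tokens_per_email):.2f}")
```

Swap in your own token counts and the current price to budget a larger batch before you run it.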

Now for the interesting question. How good were the emails Chat GPT generated?

"Dear Robert, I hope this email finds you well. As someone who loves pizza, I thought I'd personally recommend a restaurant that I believe you will enjoy. Have you heard of 'Pizzeria Mozza' in Los Angeles? It's a top-rated Italian restaurant that serves some of the best wood-fired pizzas in the city. As a software engineer, I'm sure you appreciate a good balance between quality and efficiency, and that's exactly what makes Pizzeria Mozza special. Their menu features classic and creative pizza options that are sure to satisfy your cravings. As a member of The Fork, you'll have access to exclusive deals and can easily make a reservation at Pizzeria Mozza. You can also discover other great pizza places nearby if you feel like exploring more options. I hope you'll consider giving The Fork a try for your next pizza night out! Best regards, Brat Tatouille The Fork Assistant"

"Dear Emily, I hope this email finds you well. As a fellow lover of sushi, I wanted to reach out and recommend a restaurant that I think you'll absolutely love. If you haven't tried it already, I highly suggest checking out Sushi Inn, located in downtown Toronto. Their traditional sushi rolls are made with fresh, high-quality ingredients that are sure to satisfy your cravings. Plus, the sleek and modern decor is perfect for a chic night out. As a graphic designer, I think you'll appreciate the attention to detail in every aspect of the dining experience. If you're interested in checking out Sushi Inn, I highly recommend using The Fork to make a reservation. Our platform makes it easy to discover and book the best restaurants in the city. Plus, with our user reviews feature, you can feel confident knowing that you're making the right choice. Thank you for considering my recommendation, and happy dining! Best regards, Brat Tatouille The Fork Team."

"Dear Beatriz Silva, Hope this email finds you well! As a professional surfer, I am sure you are always on the lookout for the best spots to eat while traveling. As luck would have it, our team at The Fork can help you find amazing restaurants anywhere you go. Since you mentioned that Fish and Chips is your favorite food, I wanted to recommend The Seafood Kitchen, a wonderful restaurant that specializes in all things seafood. Their Fish and Chips dish is absolutely delicious and I am certain you will love it. Not only that, but this restaurant has a relaxed and cozy atmosphere that will make you feel right at home. I invite you to visit our website and check out The Seafood Kitchen and other fantastic restaurants in the area. With just a few clicks, you can find your perfect dining spot and make a reservation hassle-free. Thank you for considering The Fork and we hope to help you discover your new favorite restaurant soon! Best regards, Brat Tatouille The Fork Team."

I have included 3 of the 10 emails we generated and, as I do not consider myself a marketing guru, I will let you be the judge of their quality.

Conclusion:

I hope you enjoyed reading this blog, and I highly encourage you to try out this macro for yourself. With community macros like this one bringing Chat GPT into its data preparation platform, Alteryx is once again showcasing its flexibility and adaptability. Recent developments in AI technology have opened up new and exciting possibilities for enhanced analysis, and I am sure there is much more to come in the field of data science. I think the convergence of AI and data preparation holds immense potential for transforming the way we work with data, and I would be very interested to hear your thoughts on the matter! Don't hesitate to drop me a message if you have any more questions about using Chat GPT in Alteryx, or if you have found interesting applications for it in your own projects. I would love to know what other people have done with this incredible tool!