Introduction: AI prompting refers to the process of using artificial intelligence models, like GPT, to generate text based on a given input or prompt. It is commonly used for applications such as chatbots, content creation, language translation, and more. The generated text can be highly relevant and accurate, and the process is much faster than manual writing or data entry. One practical use is creating mock datasets, which let you prototype, test, and demo an analysis without needing access to real data.

In this blog post, I will use ChatGPT to build a mock dataset describing the hourly electricity production of a solar farm. Through this example, I hope to demonstrate how quickly language models can produce realistic datasets when the right prompts guide them. Feel free to follow along or experiment with building your own mock dataset using ChatGPT!

To create mock data with ChatGPT, the most effective method I found has three main steps:

Step 1: Initial prompt

The first step consists of laying out some background information on who you want the language model to impersonate and what problem you want it to solve. It is important to think carefully about that problem, because a well-defined question leads to a more interesting mock dataset with richer stories in it. Then, ask the AI which data fields it would need in order to answer that question.

More generally, your prompt can take the following form:

"You are a [...] expert with 20 years of experience in [...]. You are responsible for [...] in [...]. What information would you need to [...]?"

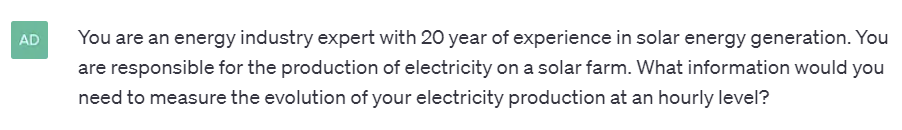
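If you plan to reuse this template, it can help to keep it as a small Python helper. The sketch below is only one way to do it: the function name `build_initial_prompt`, its parameter names, and the example values are assumptions made for illustration, not the exact prompt used in this post.

```python
# Hypothetical helper: fills the blanks of the prompt template above.
PROMPT_TEMPLATE = (
    "You are a {role} expert with 20 years of experience in {domain}. "
    "You are responsible for {responsibility} in {setting}. "
    "What information would you need to {goal}?"
)


def build_initial_prompt(role, domain, responsibility, setting, goal):
    """Return the initial prompt with every blank filled in."""
    return PROMPT_TEMPLATE.format(
        role=role,
        domain=domain,
        responsibility=responsibility,
        setting=setting,
        goal=goal,
    )


# Example values are assumptions chosen to resemble the solar farm use case.
prompt = build_initial_prompt(
    role="renewable energy",
    domain="solar power generation",
    responsibility="monitoring electricity production",
    setting="a solar farm",
    goal="analyze hourly electricity production",
)
print(prompt)
```

Keeping the blanks as named parameters makes it easy to try the same structure on a different domain.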

You will be surprised by the insights you can gain by prompting ChatGPT to work out for itself which information it needs to solve a problem. In my use case, here is the answer I obtained from my initial prompt:

ChatGPT impersonated the industry expert and came up with information I did not even know I would need to answer my initial question.

Step 2: Generate a mock data frame

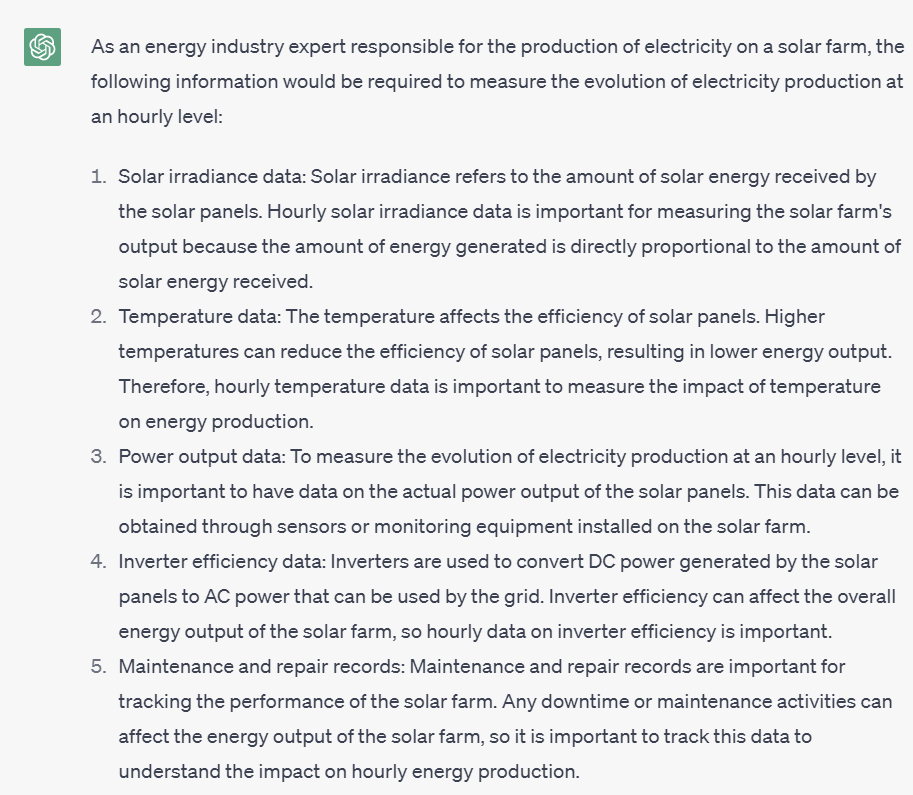

In this second step, the aim is to build a few rows of data that serve as the frame of our final dataset. To do so, simply ask ChatGPT to come up with a few rows of data based on the fields it just identified.

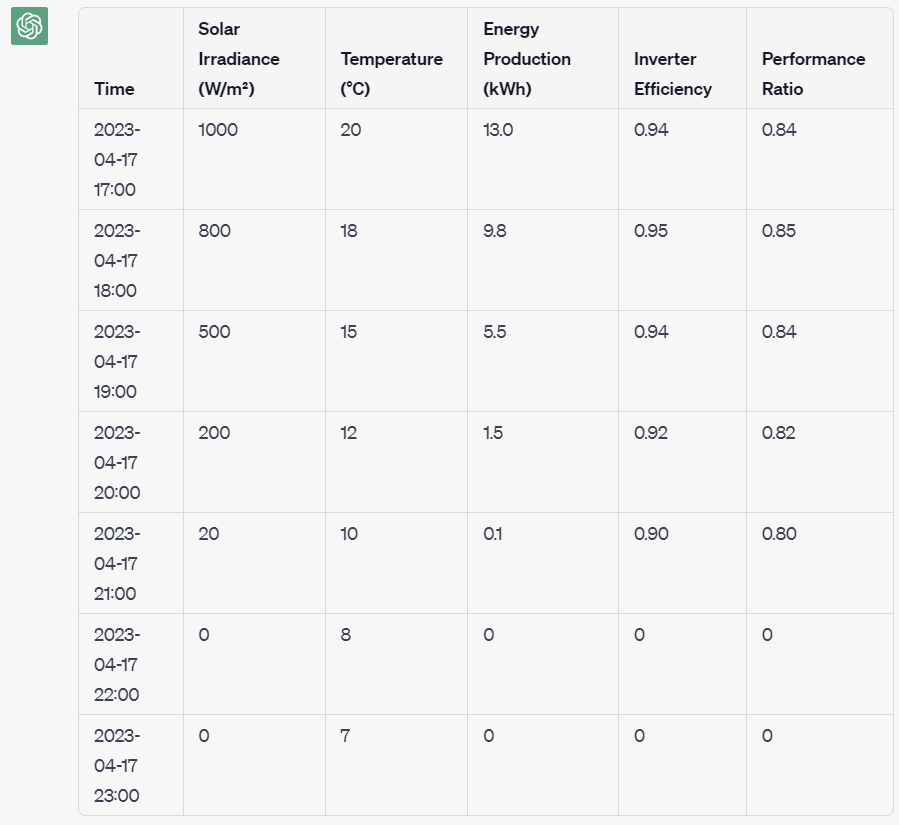

If everything runs smoothly, you should now see the frame of your mock dataset appear before you.

If you are satisfied with the data you obtained at this stage, simply highlight the table including its headers, copy it, and paste it into Excel or Google Sheets.
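If the table arrives as pipe-delimited Markdown text rather than a clean grid, a few lines of Python can convert it to CSV before you open it in a spreadsheet. This is a minimal sketch using only the standard library. The sample table, its column names, and the helper names `parse_markdown_table` and `to_csv` are made up for illustration.

```python
import csv
import io

# Hypothetical sample: column names and values are made up for illustration.
pasted_table = """\
| Timestamp | Solar Irradiance (W/m2) | Energy Output (kWh) |
|---|---|---|
| 2023-01-01 00:00 | 0 | 0 |
| 2023-01-01 12:00 | 650 | 410 |
"""


def parse_markdown_table(text):
    """Convert a pipe-delimited table into a list of row dictionaries."""
    rows = []
    for line in text.strip().splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        # Skip the separator row made only of dashes (and optional colons).
        if all(cell and set(cell) <= set("-:") for cell in cells):
            continue
        rows.append(cells)
    header, *body = rows
    return [dict(zip(header, row)) for row in body]


def to_csv(records):
    """Serialize the row dictionaries as CSV text that a spreadsheet can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


records = parse_markdown_table(pasted_table)
print(to_csv(records))
```

All values stay as strings here, so convert numeric columns yourself if you need to do arithmetic on them.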

At this point, you might come across the first hurdle of the mock data generation process. ChatGPT caps the number of tokens it can produce in a single response, which limits the size of the table you can generate in one go. To work around this limitation, simply ask ChatGPT to continue, and it should carry on from where it stopped.

Obtaining the desired mock data frame may be an iterative process, so do not hesitate to ask ChatGPT to add, delete, or modify information. Repeat the process until you are satisfied with the frame.

Step 3: Build the dataset using a Python Script

Once you are satisfied with the frame you built, the next step is to build the dataset in Python. Why is this important, you might ask?

Transparency: Asking ChatGPT to write a Python script lets you open the infamous AI black box and see exactly how your dataset is being created. This is very important if you are going to share your work with anyone or if you need to explain how you built the dataset you are using.

Reproducibility: If you ask ChatGPT the same question multiple times, its answer will change slightly each time. A Python script does not have this problem: run with the same inputs and a fixed random seed, it produces the same data every time. When you need reproducible results, Python is the safer way to go.

Flexibility: Python lets you incorporate a wide array of parameters, functions, and advanced options to build the exact dataset you want.

Precision: With a script, you can change the value of a single parameter and regenerate the dataset, leaving everything else untouched. That level of control is hard to get from a prompt alone.

Scalability: Creating your dataset with Python lets you circumvent the token limitation mentioned earlier. It is far easier and faster to generate a large amount of data with a loop in Python than with a language model.
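The reproducibility and scalability points can be illustrated with a short sketch. It assumes a hypothetical `generate_rows` function, a made-up `temperature_c` column filled with uniform noise, and an arbitrary start date. None of these come from the script ChatGPT produced for this post.

```python
import random
from datetime import datetime, timedelta


def generate_rows(n_hours, seed=42):
    """Generate n_hours of hourly mock readings from a seeded generator."""
    rng = random.Random(seed)  # fixed seed -> identical data on every run
    start = datetime(2023, 1, 1)  # assumed start date
    rows = []
    for hour in range(n_hours):
        rows.append(
            {
                "timestamp": start + timedelta(hours=hour),
                # Placeholder values: uniform noise, not a physical model.
                "temperature_c": round(rng.uniform(5.0, 35.0), 1),
            }
        )
    return rows


# A full year of hourly rows, far more than a single chat response can hold.
first_run = generate_rows(24 * 365)
second_run = generate_rows(24 * 365)
print(len(first_run), first_run == second_run)  # prints: 8760 True
```

Changing the `seed` argument gives a different but equally repeatable dataset, which is handy when you want several variants of the same mock data.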

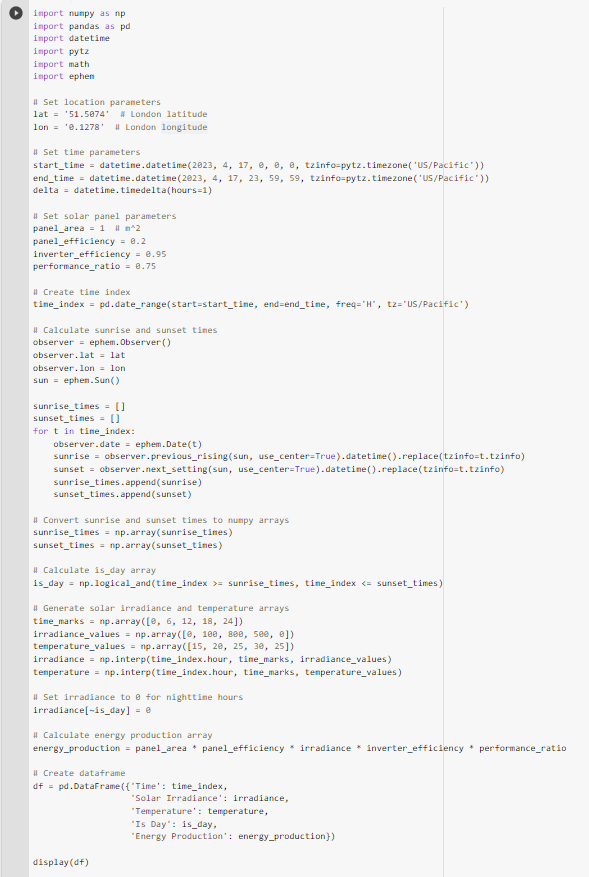

To write the Python script, simply ask ChatGPT to reproduce the dataset using Python and watch the language model come up with the code for you.

At that point, it is important to check that the code actually runs and that it outputs the data you want. To do so, copy and paste the code from ChatGPT into a Python environment such as Google Colab and run it.
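Beyond eyeballing the output, a few automated checks can catch obvious problems. The sketch below is an assumption about what such checks might look like. The column names `timestamp`, `solar_irradiance`, and `energy_output` and the `find_problems` helper are hypothetical, so adapt them to the fields in your own dataset.

```python
from datetime import datetime, timedelta

# Hypothetical column names; adapt them to the fields in your own dataset.
REQUIRED_COLUMNS = ("timestamp", "solar_irradiance", "energy_output")


def find_problems(rows):
    """Return a list of human-readable problems found in the generated rows."""
    problems = []
    for i, row in enumerate(rows):
        missing = [col for col in REQUIRED_COLUMNS if col not in row]
        if missing:
            problems.append(f"row {i}: missing columns {missing}")
            continue
        if row["solar_irradiance"] < 0 or row["energy_output"] < 0:
            problems.append(f"row {i}: negative value")
        # Consecutive rows should be exactly one hour apart.
        if i > 0 and "timestamp" in rows[i - 1]:
            gap = row["timestamp"] - rows[i - 1]["timestamp"]
            if gap != timedelta(hours=1):
                problems.append(f"row {i}: gap of {gap} instead of 1 hour")
    return problems


# Made-up sample with two deliberate errors in the last row.
sample = [
    {"timestamp": datetime(2023, 1, 1, 11), "solar_irradiance": 600.0, "energy_output": 380.0},
    {"timestamp": datetime(2023, 1, 1, 12), "solar_irradiance": 650.0, "energy_output": 410.0},
    {"timestamp": datetime(2023, 1, 1, 14), "solar_irradiance": -5.0, "energy_output": 300.0},
]
for problem in find_problems(sample):
    print(problem)
```

An empty list means the rows passed these particular checks. It does not prove the data is realistic.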

Language models are still improving, and you should not expect the code you initially generate to be flawless. Luckily, ChatGPT keeps the earlier messages of a conversation in context, which makes it easy to iteratively modify and improve the code it is writing. If you have sufficient Python knowledge, do not hesitate to modify the code yourself or guide ChatGPT toward a better answer.

Here are the main follow-up queries I made to iteratively improve the Python script:

- Add a column to indicate if it is night or day. If it is night, solar irradiance should be 0.

- Great now make the IS DAY value realistic

- There appears to be a mistake in the IS DAY calculation because it always comes back as true. Fix the error and simplify the code.
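To make the intent of these queries concrete, here is a minimal sketch of a day/night flag that drives solar irradiance. It is an illustration only and not the script ChatGPT generated for this post. The fixed sunrise and sunset hours, the sine-shaped irradiance curve, the panel area, and the efficiency are all simplifying assumptions.

```python
import math
import random
from datetime import datetime, timedelta

SUNRISE_HOUR = 6          # assumed fixed sunrise, for illustration only
SUNSET_HOUR = 18          # assumed fixed sunset, for illustration only
PEAK_IRRADIANCE = 1000.0  # W/m^2, assumed clear-sky peak
PANEL_AREA_M2 = 5000.0    # assumed total panel area
PANEL_EFFICIENCY = 0.18   # assumed conversion efficiency


def is_day(timestamp):
    """Return True only between sunrise (inclusive) and sunset (exclusive)."""
    return SUNRISE_HOUR <= timestamp.hour < SUNSET_HOUR


def solar_irradiance(timestamp, rng):
    """Return 0 at night, otherwise a noisy half sine wave peaking at midday."""
    if not is_day(timestamp):
        return 0.0
    # Evaluate the curve at the middle of the hour so daytime values stay positive.
    day_length = SUNSET_HOUR - SUNRISE_HOUR
    fraction = (timestamp.hour + 0.5 - SUNRISE_HOUR) / day_length
    clear_sky = PEAK_IRRADIANCE * math.sin(math.pi * fraction)
    cloud_factor = rng.uniform(0.6, 1.0)  # crude stand-in for cloud cover
    return round(clear_sky * cloud_factor, 1)


def build_dataset(n_hours, seed=0):
    """Build n_hours of hourly rows starting from an assumed date."""
    rng = random.Random(seed)
    start = datetime(2023, 6, 1)  # assumed start date
    rows = []
    for hour in range(n_hours):
        timestamp = start + timedelta(hours=hour)
        irradiance = solar_irradiance(timestamp, rng)
        # Energy over one hour in kWh: W/m^2 * m^2 * efficiency / 1000.
        energy_kwh = irradiance * PANEL_AREA_M2 * PANEL_EFFICIENCY / 1000.0
        rows.append(
            {
                "timestamp": timestamp,
                "is_day": is_day(timestamp),
                "solar_irradiance": irradiance,
                "energy_output_kwh": round(energy_kwh, 1),
            }
        )
    return rows


for row in build_dataset(48)[4:8]:
    print(row)
```

Because `is_day` is a plain comparison on the hour, it is easy to confirm that the flag is not always true, which was the bug described in the last query.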

For those of you who are interested, here is the final Python script I generated.

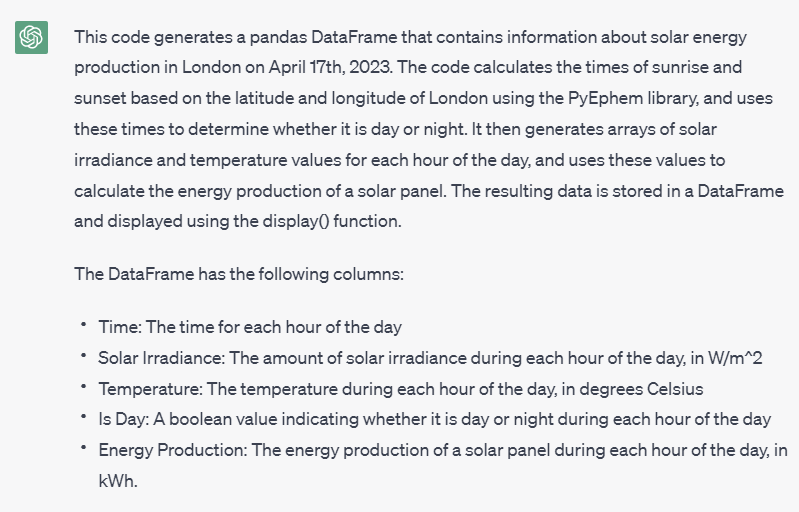

When asked, ChatGPT explains this Python script in the following way:

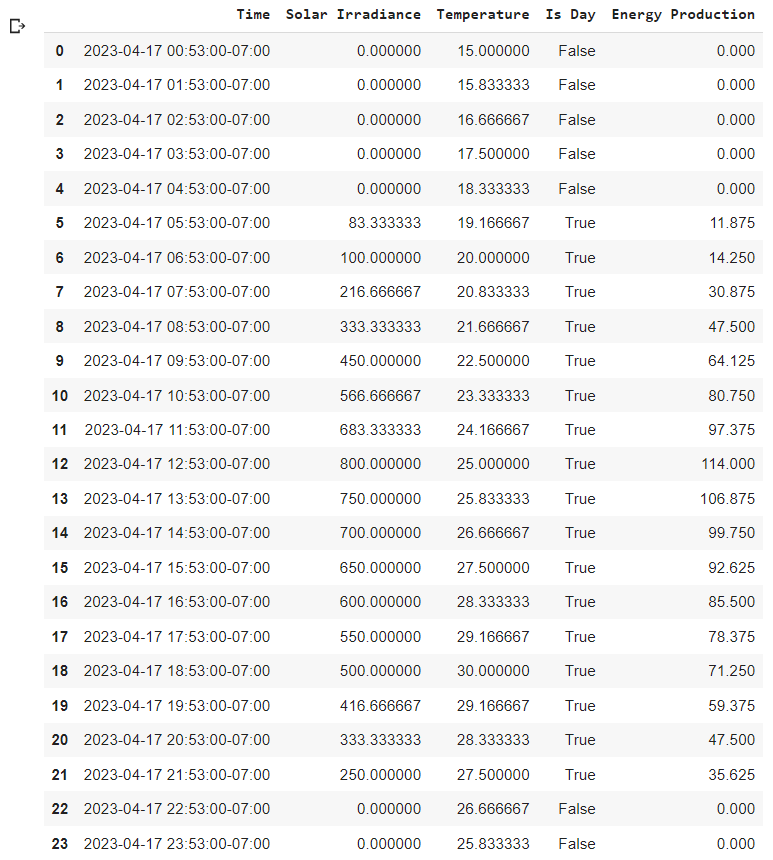

And here is the final mock dataset:

Conclusion: AI prompting with language models like ChatGPT can be a powerful tool for generating realistic mock datasets efficiently. By following a few simple steps, we were able to create a mock dataset describing the hourly electricity production of a solar farm. We started by providing an initial prompt to guide the language model in the right direction, then generated a mock data frame, and finally wrote a Python script to build the final dataset. Throughout this process, we were able to interact with ChatGPT to refine our questions and iteratively improve the code it was generating.

While language models like ChatGPT are still evolving, they have the potential to transform the way we generate and work with data. By harnessing them, we can save time and produce mock datasets that are better tailored to the problem at hand. As language models continue to advance, we can expect AI prompting to become an even more integral part of the data generation process.